Many developers have encountered the terms OIDC, SAML and OAuth 2.0. However, hearing about them and knowing what they are, as well as when to use them, are different things.

For instance, can you say which of these protocols are primarily intended for Authorization and which should be used for Authentication? Which protocols support only Browser-based workflows and which provide other workflow mechanisms, too? Which produces a JWT and which produces an Assertion?

Whilst I don’t necessarily disagree with @JustDeezGuy, particularly the part “…and you shouldn’t rely on third-party SDKs…”, Auth, with all its various permutations and combinations, isn’t as easy as @Ashref might lead folks to believe. Read my Build, Buy or DIY post if you want to know more about my thoughts on the matter.

I do, however, concur with @JustDeezGuy’s follow-up comment, which says “…avoid both nonconformance and vendor-specific features, both of which introduce significant risk as well as defeating the purpose of standardisation in the first place.” That’s largely the problem: vendor-specific implementations are quite frequently nonconformant, often because there’s a lot of confusion around even the standards specifications themselves, which makes it hard for implementers to make the right choices.

My name’s Peter Fernandez, and in this article, I’m going to discuss some of the various aspects regarding the specifications associated with modern security protocols.

OAuth 2.0

Let’s start with Open Authorization 2.0 (a.k.a. OAuth 2.0). OAuth 2.0 was first introduced in RFC 6749, which was published in late 2012. It was introduced as a direct replacement for its predecessor, OAuth 1.0, which was obsoleted due to various security/operational issues.

OAuth 2.0 is an Authorization protocol; specifically, it is intended primarily to deal with Delegated Authorization rather than anything else.

What is Delegated Authorization, you may ask? Well, authorisation has been around as long as authentication (arguably longer), and most people know it as a function of access control: what someone is allowed to do based on policy and permission. Delegated authorisation complements this by adding the ability for a user to consent to what an application can access on their behalf.

In essence, Delegated Authorization refers to the situation where an authenticated User consents to the access of specific information, on their behalf, thus delegating authorisation to some (third-party) application.

Let me elaborate. Around the time OAuth 2.0 was being developed, SAML was the protocol of choice for modern Browser-based application Authentication, particularly in an Enterprise environment. SAML isn’t typically used for authorisation, and it wasn’t the only authentication protocol in use either; authentication in an application context was still being performed using LDAP, explicit UserID/Password validation, and even the likes of Kerberos and RADIUS. However, SAML introduced a new standard for user authentication and, essentially, Browser-based SSO as we know it today was born.

Around the same time, the rise of the public-facing Web API (accessed using the HTTP protocol) was giving developers the ability to build rich applications, supporting system expansion at an unprecedented rate.

With these Web APIs enabling the likes of mobile apps, single-page applications, etc., new security and security-related challenges were emerging. For web APIs (or just APIs, as we now call them; the term SDK is now typically used for what was arguably the classic definition of an API, but I digress), secure access is an important factor, and the mechanisms most commonly used for that at the time were Basic Authentication or an API Key.

Authorisation is pretty much predicated on an authenticated context being established. For authorised API access, Basic Authentication essentially provides the ID — e.g. the UserID — and the Password of the accessing party for validation (against some known credentials).

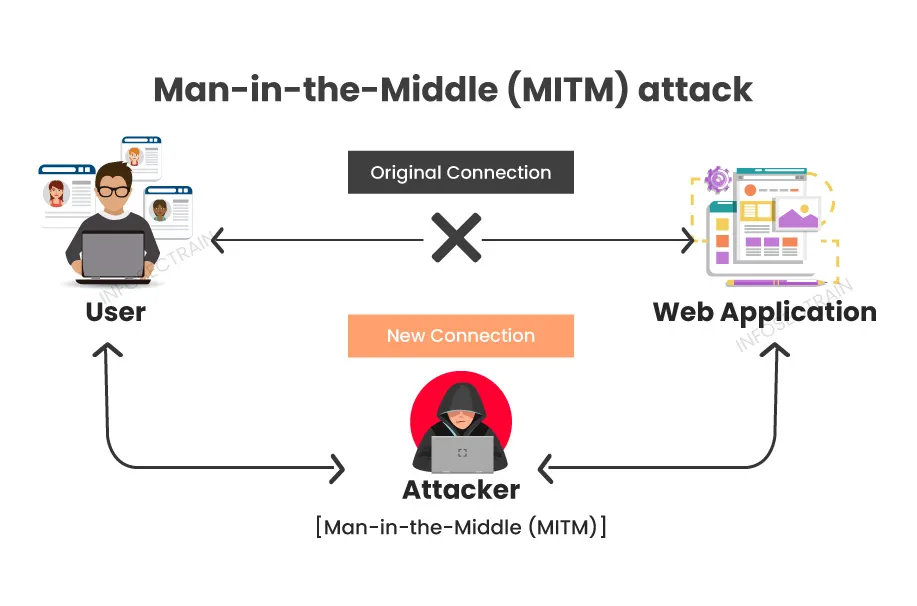
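As a minimal Python sketch (the helper names are hypothetical, purely for illustration), this is how a Basic Authentication header is formed per RFC 7617. The UserID and Password are merely Base64-encoded, not encrypted, so anything that can read the header can recover them, which is exactly the exposure described next.

```python
import base64


def basic_auth_header(user_id: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value (RFC 7617)."""
    raw = f"{user_id}:{password}".encode("utf-8")
    # Base64 is an encoding, not encryption: anyone can reverse it.
    return "Basic " + base64.b64encode(raw).decode("ascii")


def decode_basic_auth(header_value: str) -> tuple:
    """What the receiving API (or anything in between) can do with the header."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic Authorization header")
    decoded = base64.b64decode(encoded).decode("utf-8")
    user_id, _, password = decoded.partition(":")  # a UserID may not contain ':'
    return user_id, password


header = basic_auth_header("alice", "s3cret")
print(header)                     # Basic YWxpY2U6czNjcmV0
print(decode_basic_auth(header))  # ('alice', 's3cret')
```

Because REST requests carry no session, this header has to accompany every single call, so the credentials travel over and over again.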

Whilst HTTPS provides a mechanism for the secure transport of an ID and Password, the credentials themselves still end up being handled by a number of different parties, potentially exposing them to all sorts of vulnerabilities.

Further, HTTPS was far from ubiquitous at the time and, in any case, who could say that the API being called wasn’t created by some malicious third party intent on stealing those vulnerable credentials?

The use of an API typically involves a RESTful process. REST (a.k.a. Representational State Transfer) does not make use of a session context for communication. Ergo, any and every REST request must carry enough information to establish an authenticated context.

An API key gets around credential vulnerability by using an opaque “token” — i.e. something akin to a GUID (Globally Unique Identifier), that’s representative of credentials rather than being the actual credentials themselves.

However, an API Key is typically not issued for a specific entity, such as an individual user. API Keys are notoriously difficult to manage and, as an API Key is often shared by more than one accessing party, it’s almost impossible to tell who or what actually made the API call and, more importantly, on whose behalf.

Additionally, with either Basic Authentication or an API Key there’s no real way for a user to consent to an application accessing information on their behalf. With regulatory compliance regimes such as GDPR still some years away, that wasn’t necessarily an immediate issue, but it was going to become a challenge.

Remember, too, that an API (also known as a Resource Server in OAuth 2.0) typically provides access to resources either owned or managed by the entity on whose behalf the API is being called. Calling an API involves the use of HTTP — or more specifically HTTPS — but doesn’t involve the use of a Browser. Hence, there’s no mechanism for a user to interactively provide consent, or anything else, as part of the API calling process.

The solution to the problem became OAuth 2.0. OAuth 2.0 introduced concepts that would enable authorised access to resource information, via an API, on behalf of whatever entity was using the application (typically a User).

Many folks are under the impression that OAuth 2.0 replaces SAML, the use of a UserID/Password, or any other authentication mechanism for that matter. It doesn’t. In fact, it complements them: an OAuth 2.0 Authorization Server (the thing that mints an Access Token, a.k.a. Bearer Token) leverages whatever authentication mechanism is available to establish a secure, authenticated context in which the token can be created for (delegated) authorisation use.

Access (Bearer) Tokens

At the heart of OAuth 2.0 lies the Access Token. An Access Token is a security artefact that essentially acts like an API Key, but one that’s dynamically generated in the context of the entity (typically a User) on behalf of whom an API is being accessed.

Access Tokens have a specific lifetime and are typically managed by an OAuth 2.0 Authorization Server implementation. Lifetime is an important addition because, unlike with an API Key, an Access Token can expire and effectively become obsolete.
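From the application’s side, that lifetime is usually communicated alongside the token rather than read from it: the token response defined in RFC 6749 (section 5.1) carries an expires_in value in seconds. Here’s a minimal sketch with hypothetical names and an arbitrary 30-second allowance for clock skew:

```python
import json
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class HeldToken:
    access_token: str  # kept as an opaque string; the application never inspects it
    token_type: str
    expires_at: float  # seconds since the epoch

    def is_expired(self, now: Optional[float] = None, skew: float = 30.0) -> bool:
        """Treat the token as expired a little early to allow for clock skew."""
        current = time.time() if now is None else now
        return current >= self.expires_at - skew


def hold_token(token_response_json: str, received_at: float) -> HeldToken:
    """Parse a token response (RFC 6749, section 5.1) and note when it lapses.

    Assumes the server included expires_in, which the RFC only RECOMMENDS.
    """
    body = json.loads(token_response_json)
    return HeldToken(
        access_token=body["access_token"],
        token_type=body["token_type"],
        expires_at=received_at + int(body["expires_in"]),
    )


response = '{"access_token": "opaque-value", "token_type": "Bearer", "expires_in": 3600}'
token = hold_token(response, received_at=1_000_000.0)
print(token.is_expired(now=1_000_060.0))  # False
print(token.is_expired(now=1_003_600.0))  # True
```

Once is_expired() returns True, the application would obtain a new token (for example, via a Refresh Token, if one was issued) rather than keep calling the API with a stale one.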

Third-party SaaS Authorization Servers like Auth0 typically express the Access Token in JWT form, but the OAuth 2.0 standard itself does not prescribe any particular format. Consequently, this can create confusion when developers think about a “JWT” and when it should be used. I’ll talk more about that in the next section.
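To demystify the format itself: a signed JWT is just three base64url-encoded segments, header.payload.signature. The sketch below uses only Python’s standard library and a shared HMAC key (HS256) for brevity; that’s an assumption for illustration, since RFC 9068 recommends asymmetric signing and real Authorization Servers typically publish public keys for APIs to verify against. It shows the checks an API, as the intended audience, would perform: signature, expiry (exp) and audience (aud).

```python
import base64
import hashlib
import hmac
import json
import time


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _json_segment(obj: dict) -> str:
    return b64url_encode(json.dumps(obj, separators=(",", ":")).encode("utf-8"))


def sign_hs256(claims: dict, key: bytes) -> str:
    """Produce a compact JWT: header.payload.signature."""
    signing_input = _json_segment({"alg": "HS256", "typ": "at+jwt"}) + "." + _json_segment(claims)
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + b64url_encode(signature)


def verify_hs256(token: str, key: bytes, expected_aud: str) -> dict:
    """The API-side checks: algorithm, signature, expiry (exp) and audience (aud)."""
    header_b64, payload_b64, signature_b64 = token.split(".")
    if json.loads(b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected alg")  # never let the token pick the algorithm
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    expected_sig = hmac.new(key, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected_sig, b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    if time.time() >= claims["exp"]:
        raise ValueError("token expired")
    aud = claims["aud"]
    if expected_aud not in (aud if isinstance(aud, list) else [aud]):
        raise ValueError("token was not intended for this API")
    return claims


signing_key = b"demo-shared-secret"  # hypothetical key, for illustration only
token = sign_hs256(
    {"iss": "https://auth.example.com/", "sub": "user-123",
     "aud": "https://api.example.com", "scope": "read:orders",
     "exp": int(time.time()) + 300},
    signing_key,
)
print(token.count("."))  # 2
print(verify_hs256(token, signing_key, "https://api.example.com")["sub"])  # user-123
```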

To add to the confusion, Auth0 also uses the scope claim in a JWT to express the consent provided by a User together with the impact of any Role Based Access Control (RBAC). Whilst scope is defined as part of RFC 9068 (the JWT Profile for OAuth 2.0 Access Tokens), arguably, its meaning differs between OAuth 2.0, OIDC, and how Auth0 treats it.
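Whatever meaning a given server attaches to it, the scope value itself is, per RFC 6749 (section 3.3), a space-delimited list of case-sensitive strings. A minimal sketch of how an API might check it; the scope names used here are hypothetical:

```python
from typing import Set


def has_scopes(scope_claim: str, required: Set[str]) -> bool:
    """True if every required scope appears in the space-delimited scope claim."""
    granted = set(scope_claim.split())
    return required <= granted


claims = {"sub": "user-123", "scope": "read:orders write:orders"}
print(has_scopes(claims["scope"], {"read:orders"}))    # True
print(has_scopes(claims["scope"], {"delete:orders"}))  # False
```

Since the comparison is exact, “Read:Orders” would not satisfy a requirement for “read:orders”, matching the case-sensitivity the RFC specifies.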

Minting an Access Token is handled by the Authorization Server, and typically involves a protocol “dance” similar to that illustrated below, known as Authorization Code Flow.

Explaining that dance, as well as some of the other available flows, is beyond the scope of this article. Suffice it to say that once the Access Token is created, the application uses it when calling the API, typically supplying it as a Bearer token in the HTTP Authorization header.

The diagram above illustrates Authorization Code Flow with PKCE, a variation using the best-practice Proof Key for Code Exchange (PKCE) mechanism, which is mandatory for public clients and recommended generally to mitigate authorization code interception attacks.
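The PKCE part is small enough to sketch. Per RFC 7636, the application generates a random code_verifier, sends a derived code_challenge (with code_challenge_method=S256) in the authorization request, and later proves possession by sending the original code_verifier when exchanging the code for tokens. A minimal Python version (the function name is hypothetical):

```python
import base64
import hashlib
import secrets
from typing import Tuple


def make_pkce_pair() -> Tuple[str, str]:
    """Return (code_verifier, code_challenge) using the S256 method of RFC 7636."""
    # 32 random bytes -> a 43-character base64url string; RFC 7636 allows 43 to 128
    # characters drawn from letters, digits and "-._~" (base64url uses "-" and "_").
    code_verifier = secrets.token_urlsafe(32)
    digest = hashlib.sha256(code_verifier.encode("ascii")).digest()
    # code_challenge = BASE64URL(SHA256(code_verifier)), without "=" padding.
    code_challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return code_verifier, code_challenge


verifier, challenge = make_pkce_pair()
# The challenge goes in the authorization request; the verifier stays with the
# app until the token request, so an intercepted code alone is useless.
print(len(verifier), len(challenge))  # 43 43
```

The Authorization Server recomputes the challenge from the presented verifier and refuses to issue tokens if the two don’t match.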

An application will typically hold on to an Access Token for use in an API call, but it should never be tempted to look at its contents, irrespective of whether it’s in JWT form or not. An OAuth 2.0 Access Token should only ever be consumed by the API (audience) for which it was intended!
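From the application’s side, then, the token is just an opaque string to pass along. A minimal sketch using Python’s standard library, assuming a hypothetical API URL; it builds the request with the token in the Authorization header, as described in RFC 6750 (section 2.1), but doesn’t send it:

```python
import urllib.request


def build_api_request(url: str, access_token: str) -> urllib.request.Request:
    """Attach an Access Token as a Bearer credential, without ever decoding it."""
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Bearer {access_token}")
    request.add_header("Accept", "application/json")
    return request


req = build_api_request("https://api.example.com/orders", "opaque-token-value")
print(req.get_header("Authorization"))  # Bearer opaque-token-value
# urllib.request.urlopen(req) would send it; the API then validates the token.
```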

OIDC

Having established that OAuth 2.0 is used for Authorization and is complementary to any Authentication mechanism, let’s turn our attention to OpenID Connect, a.k.a. OIDC.

OIDC is based on the OAuth 2.0 protocol and is designed as a modern authentication workflow, intended as a direct replacement for SAML (or anything else for that matter).

Originally designed for Enterprise environments (as in authentication for applications used within a company or corporate organisation), SAML is quite a complicated protocol. It typically relies on established trust relationships and uses an XML-format security artefact, typically referred to as an Assertion.

Whilst SAML still has a very valid place in modern authentication architecture (and its introduction was an important milestone, particularly in the development of SSO), in the world of B2C and B2B SaaS it’s too cumbersome for the many and varied scenarios you typically find in those environments.

For starters, XML is quite heavyweight when it comes to wire transfer, and processing it can be quite compute-intensive, too. Add to the mix that establishing and maintaining explicit trust relationships between the various and disparate systems would be an absolute nightmare, and you can quickly see why SAML doesn’t fly there.

SAML, however, is better than its predecessors — e.g. explicit UserID/Password credential validation or the use of LDAP — and its development pioneered important advances for authentication…just as the development of OAuth 2.0 did for (delegated) authorisation.

Based on learnings from the development of OAuth 2.0, together with the pioneering advancements from SAML, OIDC was born. Introduced in 2014, the OpenID Connect 1.0 Specification — which remains the core working specification today — defines a simple identity layer on top of the OAuth 2.0 protocol specification to provide for modern application authentication.

That said, using OIDC doesn’t mean an application must also use OAuth 2.0 for API access; rather, OIDC’s protocol is derived from, and extends, that of OAuth 2.0, which is why the Authorization Code Flow “dance” described above is, at least from a Browser perspective, pretty much identical.
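To illustrate how close the two are, here’s a minimal sketch (the endpoint, client and function names are all hypothetical) of the Browser redirect that starts the Authorization Code Flow. Turning the OAuth 2.0 request into an OIDC one essentially means adding the openid scope and, optionally in this flow, a nonce that the IdP echoes back inside the ID Token:

```python
import secrets
from typing import Dict, List, Tuple
from urllib.parse import parse_qs, urlencode, urlparse


def build_authorization_url(authorize_endpoint: str, client_id: str, redirect_uri: str,
                            scopes: List[str], code_challenge: str,
                            use_oidc: bool) -> Tuple[str, Dict[str, str]]:
    """Return the redirect URL plus the parameters the app must remember."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),
        "state": secrets.token_urlsafe(16),  # checked again on the callback (CSRF)
        "code_challenge": code_challenge,    # PKCE (RFC 7636)
        "code_challenge_method": "S256",
    }
    if use_oidc:
        params["scope"] = " ".join(["openid", *scopes])  # "openid" requests an ID Token
        params["nonce"] = secrets.token_urlsafe(16)       # must reappear in the ID Token
    return authorize_endpoint + "?" + urlencode(params), params


url, sent = build_authorization_url(
    "https://idp.example.com/authorize", "my-client-id",
    "https://app.example.com/callback", ["profile", "email"],
    code_challenge="challenge-from-pkce-step", use_oidc=True,
)
query = parse_qs(urlparse(url).query)
print(query["scope"])  # ['openid profile email']
```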

ID Tokens

In a similar fashion to OAuth 2.0, at the heart of OIDC lies a security artefact known as the ID Token. An ID Token is explicitly defined as a JWT (signed, and optionally encrypted) containing a number of well-defined standard attributes, also known as claims. The ID Token takes the place of the Assertion used in SAML and provides an application with an indication of a user’s authenticated state.

Unlike an OAuth 2.0 Access Token, an OIDC ID Token is absolutely intended for application consumption, with the secured claims it contains easily providing the information required to inform the user interface/user experience.

An ID Token is always expressed in JWT format, but it should never be used to call an API, even if that API can process JWT-format tokens as per RFC 9068. Though similarly named, many of the claims contained in an ID Token serve a subtly different purpose from those in an Access Token.
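To make the audience difference concrete, here’s a minimal sketch of a few of the ID Token checks listed in OpenID Connect Core 1.0 (section 3.1.3.7). It assumes the JWT signature has already been verified against the IdP’s published keys, and the names and values are hypothetical. Note that aud must contain the application’s own client_id, not an API identifier:

```python
import time
from typing import Optional


def validate_id_token_claims(claims: dict, issuer: str, client_id: str,
                             expected_nonce: Optional[str],
                             now: Optional[float] = None) -> None:
    """Raise ValueError if the (already signature-verified) ID Token claims are unacceptable."""
    current = time.time() if now is None else now
    if claims.get("iss") != issuer:
        raise ValueError("unexpected issuer")
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    # The audience is this application, not an API, which is one reason an ID Token
    # is the wrong thing to present to a Resource Server.
    if client_id not in audiences:
        raise ValueError("ID Token was not issued for this client")
    if current >= claims.get("exp", 0):
        raise ValueError("ID Token has expired")
    if expected_nonce is not None and claims.get("nonce") != expected_nonce:
        raise ValueError("nonce mismatch (possible replay)")


claims = {"iss": "https://idp.example.com/", "sub": "user-123", "aud": "my-client-id",
          "exp": 2_000_000_000, "iat": 1_999_999_000, "nonce": "n-0S6_WzA2Mj",
          "name": "Alice"}
validate_id_token_claims(claims, "https://idp.example.com/", "my-client-id",
                         "n-0S6_WzA2Mj", now=1_999_999_100)
print(f"Welcome, {claims['name']}")  # the UI can now use the claims
```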

As with SAML, responsibility for credential handling and management, along with all of its associated vulnerabilities, rests with what is typically referred to as the Identity Provider (or IdP for short). And, by incorporating aspects of OAuth 2.0, the OIDC architecture can also provide profile information about a User in an interoperable, REST-like manner through what the specification calls the UserInfo endpoint.
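The UserInfo endpoint is called with the Access Token (as a Bearer token) and returns claims as JSON. The fetch itself is omitted in this minimal sketch (the function name and data are hypothetical); what it shows is a check required by OpenID Connect Core 1.0 (section 5.3.2): the sub in the UserInfo response must exactly match the sub in the ID Token before any of its values are used.

```python
import json


def merge_userinfo(id_token_claims: dict, userinfo_json: str) -> dict:
    """Combine ID Token claims with a UserInfo response, after the mandatory sub check."""
    userinfo = json.loads(userinfo_json)
    if userinfo.get("sub") != id_token_claims.get("sub"):
        # Different subject: the UserInfo values must not be used.
        raise ValueError("UserInfo response is for a different user")
    return {**id_token_claims, **userinfo}  # UserInfo values win for overlapping claims


id_claims = {"sub": "user-123", "name": "Alice"}
profile = merge_userinfo(id_claims, '{"sub": "user-123", "email": "alice@example.com"}')
print(profile["email"])  # alice@example.com
```

Letting UserInfo values override overlapping ID Token claims is just one possible design choice; an application could equally prefer the ID Token’s values.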

SAML

I’m not going to go into too much detail regarding Security Assertion Markup Language (SAML for short) in this article; suffice it to say that SAML is arguably the father of modern Identity and Access Management. I’ve already highlighted some aspects of SAML and how they have helped to influence the development of both OIDC and OAuth 2.0, and the diagram below shows how the SAML flow influenced the aforementioned “dance” that’s part of OIDC and OAuth 2.0, as well as highlighting some notable differences:

On the face of it, the SAML protocol workflow seems far simpler than that of OIDC (which is based on OAuth 2.0). However, SAML requires a certificate-based trust relationship as a prerequisite, which makes its use in Social login scenarios and the like practically impossible.

SAML and OIDC are essentially mutually exclusive, in that an application will typically make use of one or the other but not both at the same time. However, both are compatible with OAuth 2.0; whichever of SAML or OIDC an application uses, OAuth 2.0 is always a valid complement when it comes to accessing APIs.

One thing I will say is that the use of OIDC in Enterprise environments is becoming more prevalent, partly driven by the boom in the B2B SaaS market. It’s not replacing SAML exactly, but it does mean that when it comes to things like SSO, the need to cater for interop (where there are multiple applications, each using either SAML or OIDC) makes the notion of building your own solution that much more difficult. For more on that, see my article entitled

Assertion

I will spend a moment on the topic of the SAML Assertion, really to highlight how validating a security artefact replaces validating credentials, and how this fundamentally changes what an application needs to do when it comes to authentication in a modern environment.

The introduction of SAML redefined the workflow for authentication and opened up the ability for secure application interop, at least from an identity perspective. The SAML Assertion was a fundamental part of that, by defining a way for something representative of an authentication state to be shared and exchanged without the need for exposing credentials.

With an Assertion, as with an ID Token and even an Access Token, the consumer — i.e. the Application or the API — becomes responsible for validating and subsequently consuming a security artefact, blissfully ignorant of whatever credentials were used to validate the creation of the artefact or, indeed, the supplemental mechanisms (such as MFA) that were employed as part of its creation.

A SAML Assertion is also more difficult to handle than an ID Token, as an XML document is heavier both to transmit and to process than its JWT counterpart.
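For comparison with a JWT, here’s a trimmed, unsigned SAML 2.0 Assertion and a minimal sketch that extracts the fields a Service Provider would check (issuer, subject, audience and validity window) using Python’s built-in ElementTree. Real Assertions are signed with XML Digital Signature, and verifying that correctly is a big part of what makes them harder to handle; it’s deliberately omitted here. The sample values are hypothetical.

```python
import xml.etree.ElementTree as ET

SAML_NS = {"saml": "urn:oasis:names:tc:SAML:2.0:assertion"}

# A trimmed, unsigned, hypothetical Assertion (real ones carry a <ds:Signature>).
ASSERTION_XML = """<saml:Assertion xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion"
    ID="_a1b2c3" Version="2.0" IssueInstant="2024-01-01T10:00:00Z">
  <saml:Issuer>https://idp.example.com/</saml:Issuer>
  <saml:Subject>
    <saml:NameID Format="urn:oasis:names:tc:SAML:1.1:nameid-format:emailAddress">alice@example.com</saml:NameID>
  </saml:Subject>
  <saml:Conditions NotBefore="2024-01-01T10:00:00Z" NotOnOrAfter="2024-01-01T10:05:00Z">
    <saml:AudienceRestriction>
      <saml:Audience>https://app.example.com/</saml:Audience>
    </saml:AudienceRestriction>
  </saml:Conditions>
</saml:Assertion>"""


def read_assertion(xml_text: str) -> dict:
    """Extract the fields a Service Provider checks. XML signature verification is omitted."""
    root = ET.fromstring(xml_text)
    conditions = root.find("saml:Conditions", SAML_NS)
    return {
        "issuer": root.findtext("saml:Issuer", namespaces=SAML_NS),
        "name_id": root.findtext("saml:Subject/saml:NameID", namespaces=SAML_NS),
        "audience": root.findtext(
            "saml:Conditions/saml:AudienceRestriction/saml:Audience", namespaces=SAML_NS),
        "not_on_or_after": conditions.get("NotOnOrAfter") if conditions is not None else None,
    }


info = read_assertion(ASSERTION_XML)
print(info["name_id"])          # alice@example.com
print(info["not_on_or_after"])  # 2024-01-01T10:05:00Z
```

Roughly speaking, these fields map onto the iss, sub, aud and exp claims of an ID Token, which carries the same kind of information in a far more compact form.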
